@article{liu2024dynamem,
  title={DynaMem: Online Dynamic Spatio-Semantic Memory for Open World Mobile Manipulation},
  author={Liu, Peiqi and Guo, Zhanqiu and Warke, Mohit and Chintala, Soumith and Shafiullah, Nur Muhammad Mahi and Pinto, Lerrel},
  journal={arXiv preprint arXiv:2411.04999},
  year={2024}
}

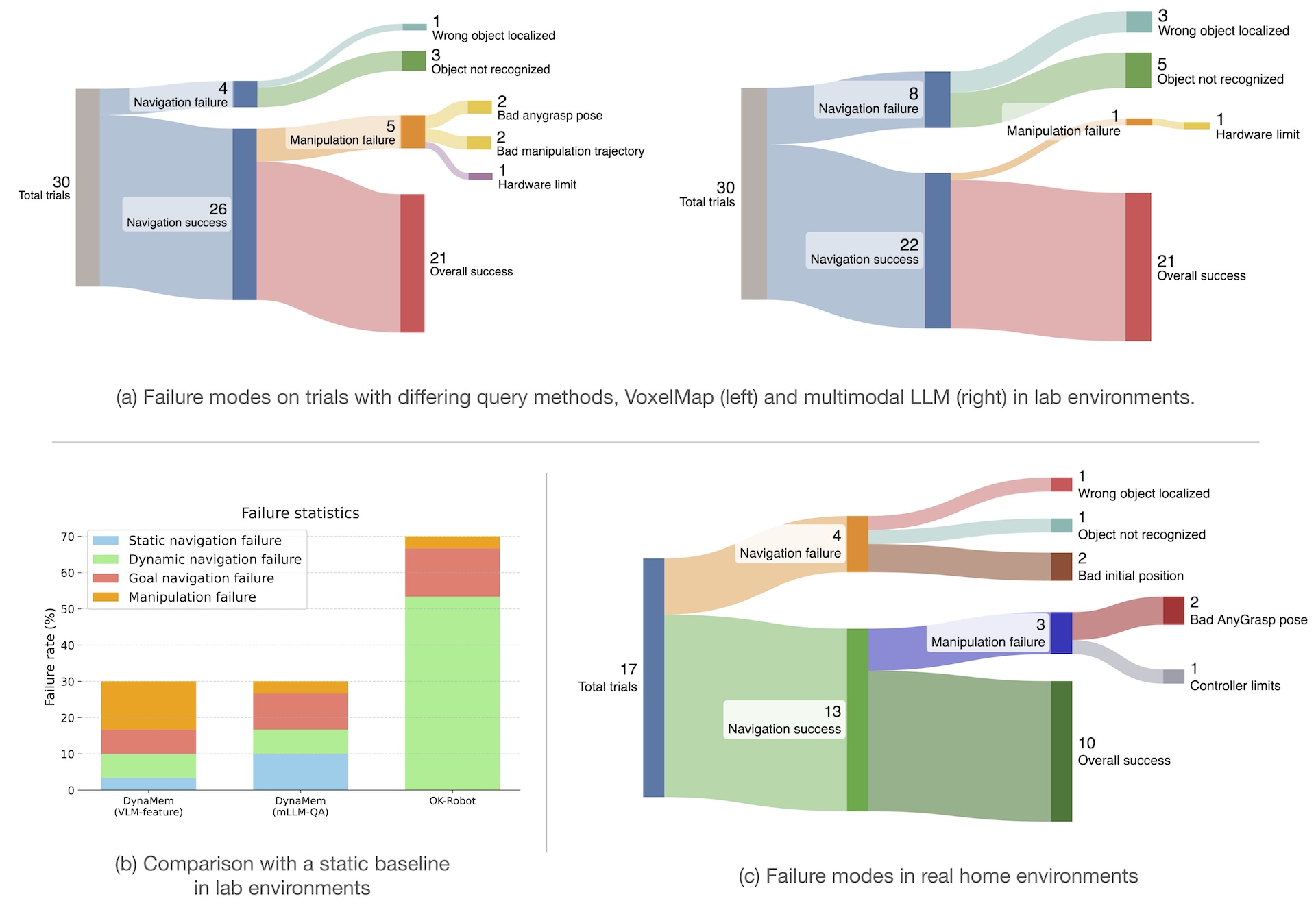

Method

Illustration of DynaMem

We maintain a feature point cloud as the robot's memory. When the robot receives a new RGB-D observation of the environment, it adds points for newly observed objects and removes points for objects that no longer exist.
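The add/remove update can be illustrated with a minimal sketch. It assumes a hypothetical voxel-keyed memory (`FeaturePointMemory`, `voxel_size`) and assumes each observation arrives already split into observed points with features and `free_space` points that the sensor saw as empty. That free-space input is a stand-in for whatever visibility check the real system uses, not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

Voxel = Tuple[int, int, int]
Point3 = Tuple[float, float, float]


@dataclass
class MemoryPoint:
    feature: List[float]  # semantic feature vector attached to this location
    last_seen: int        # id of the most recent observation containing the point


class FeaturePointMemory:
    """Voxel-keyed feature point cloud (hypothetical simplification)."""

    def __init__(self, voxel_size: float = 0.1) -> None:
        self.voxel_size = voxel_size
        self.points: Dict[Voxel, MemoryPoint] = {}

    def voxel_of(self, xyz: Point3) -> Voxel:
        # Quantize a 3D point so nearby observations share one memory entry.
        return tuple(math.floor(c / self.voxel_size) for c in xyz)

    def update(
        self,
        obs_id: int,
        observed: Sequence[Tuple[Point3, List[float]]],
        free_space: Sequence[Point3],
    ) -> None:
        # Remove: voxels this observation saw as empty no longer hold an object.
        for xyz in free_space:
            self.points.pop(self.voxel_of(xyz), None)
        # Add or refresh: observed points overwrite any older entry in their voxel.
        for xyz, feature in observed:
            self.points[self.voxel_of(xyz)] = MemoryPoint(list(feature), obs_id)


memory = FeaturePointMemory(voxel_size=0.1)
memory.update(0, [((0.05, 0.05, 0.05), [1.0, 0.0]), ((1.0, 0.0, 0.0), [0.0, 1.0])], [])
memory.update(1, [], [(1.02, 0.01, 0.01)])  # the object near x = 1.0 m was moved away
print(len(memory.points))  # 1
```

Removing before adding means that a voxel which is both observed and reported as free space in the same frame is kept, so fresh evidence of an object wins.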

To ground the object of interest described by a text query, the robot finds the memory point most similar to the query, along with the last image in which that point was observed. If the text is grounded in that image, or if the point's similarity to the text is high, the point is taken as the location of the object of interest.
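A minimal sketch of this two-step grounding rule is shown below. It assumes text and point features live in a shared embedding space compared by cosine similarity. The `found_in_image` callback (standing in for an image-level check such as a detector or VLM) and the `threshold` value are hypothetical placeholders, not values or components taken from the paper.

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def ground_query(
    text_feature: Sequence[float],
    points: Sequence[Tuple[Point3, List[float], str]],
    found_in_image: Callable[[str], bool],
    threshold: float = 0.3,
) -> Optional[Point3]:
    """Return the object's location, or None if the query is not grounded.

    points: (xyz, feature, id of the last image in which the point was observed).
    found_in_image: hypothetical image-level check for the query.
    threshold: hypothetical similarity cutoff.
    """
    if not points:
        return None
    # Step 1: pick the memory point most similar to the text query.
    xyz, feature, image_id = max(points, key=lambda p: cosine(text_feature, p[1]))
    # Step 2: accept it if its last image confirms the query or similarity is high.
    if found_in_image(image_id) or cosine(text_feature, feature) >= threshold:
        return xyz
    return None
```

Returning `None` here corresponds to the "not grounded" case, which hands control to exploration.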

If the text is grounded in the environment, the robot navigates to the target object; otherwise, the robot projects its memory into a value map and explores the environment guided by that map.
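Projecting the memory into a value map can be sketched as follows. This is an illustration under stated assumptions: points are dropped onto a 2D grid (`cell_size` is hypothetical), and each cell's value combines similarity to the query with a bonus for how long ago it was last observed (`staleness_weight` is hypothetical). The exact scoring DynaMem uses is not given in this section, so treat the formula as a placeholder.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Cell = Tuple[int, int]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def build_value_map(
    points: Sequence[Tuple[float, float, float, List[float], int]],
    text_feature: Sequence[float],
    now: int,
    cell_size: float = 0.5,
    staleness_weight: float = 0.05,
) -> Dict[Cell, float]:
    """Project 3D memory points onto a 2D grid of exploration values.

    Each point is (x, y, z, feature, last_seen). The scoring rule below
    (semantic similarity plus a bonus for long-unseen cells) is an assumption.
    """
    value_map: Dict[Cell, float] = {}
    for x, y, _z, feature, last_seen in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))  # drop height
        score = cosine(text_feature, feature) + staleness_weight * (now - last_seen)
        value_map[cell] = max(value_map.get(cell, -math.inf), score)
    return value_map


def next_exploration_target(value_map: Dict[Cell, float]) -> Optional[Cell]:
    # Head for the highest-value cell; None if the memory is empty.
    return max(value_map, key=value_map.get) if value_map else None
```

With a small `staleness_weight` the robot is drawn toward regions that already look semantically related to the query; raising it shifts exploration toward areas it has not checked recently.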